Chatting with Magisterium AI

The Possibilities, Problems, and Perils of Catholic AI, and a Daring Jailbreak...

I had a chance to play with Magisterium AI, the large language model (LLM) designed to answer questions about Catholic doctrine. I explain the background and my theoretical concerns with the program first, but if you want to jump ahead to my actual experiments with Magisterium AI, scroll down. Read to the end to find out how I ended up “jailbreaking,” or hacking, the AI to get it to do something it was programmed not to do.

Over the summer, the U.S.-based tech company Longbeard released to the public the artificial intelligence (AI) program Magisterium AI. Similar to “large language models” (LLMs) like OpenAI’s ChatGPT and Google’s Bard, Magisterium AI is “trained” on a huge database of written documents and programmed to analyze those documents in order to answer queries from human users. Unlike other LLMs, however, Magisterium AI is trained using official documents of the Catholic Church and is designed specifically to answer questions regarding the Catholic faith.

According to the National Catholic Register:

Currently experimental, [Longbeard founder and CEO Matthew] Sanders told the Register that Magisterium AI is aimed primarily at formators and teachers of the faith, helping priests to enrich their homilies, facilitate catechism classes and to assist parents in catechizing their children.

Among its services, Magisterium AI’s creators say it can answer “any questions” on Church teaching, practices or other topics, helping to “explain complex theological, philosophical, and historical concepts in simple, understandable language.”

It can also “provide contextual information” on the Church’s history, “helping users understand why the Church teaches what it does,” as well as generate theological reflections, summarize Church documents, and be used to create educational resources.

I first mentioned Magisterium AI in an article late last month and expressed skepticism about the program, arguing that it was unlikely to be able to deliver on some of the promises made by its boosters and that it is premised on a faulty theological understanding of the Magisterium and Tradition. Most of the reporting on Magisterium AI, such as the National Catholic Register article already cited, the Religion News Service article I relied on in my earlier post, and an article at Crux, simply repeats the claims of its boosters without engaging in much critical inquiry.

I decided to test out Magisterium AI. Although coming in as a skeptic, I wanted to give the program an honest shot at showing me what it’s capable of. Going in, I saw two potential problems with the program:

Hallucinations

Since their release in 2022, LLMs like ChatGPT have become notorious for what are called “hallucinations,” or incorrect statements that nevertheless sound true (or “truthy,” to use Stephen Colbert’s felicitous term). LLMs have also been known to provide citations referencing completely invented, but plausible sounding, scholarly articles.

Magisterium AI’s developers have addressed the second problem by programming it to provide citations only to the official Church documents included in its database, thus making it impossible for it to invent a source.

Concerning hallucinations in general, however, the program’s boosters suggest that the fact that it is only trained on official Church documents will minimize that problem. According to Fr. Michael Baggot, L.C., a professor of bioethics at the Pontifical Regina Apostolorum Athenaeum and a collaborator on the project, as quoted in the National Catholic Register, this limited training means it is “much less prone to give false or misleading responses based on unreliable sources.” Fr. Phillip Larrey, a professor of logic and epistemology at the Pontifical Lateran University, goes even further, stating, according to Religion News Service, “It’s never going to give you a wrong or false answer.” Baggot, more realistically, warns, “[S]ince any generative AI system can ‘hallucinate’, users should always consult the original documents to avoid confusion.”

The problem with this claim, even Baggot’s more nuanced version, is that when LLMs “learn” from the documents in their database, what they are learning is how to mimic the text. So, when asked a question, more often than not LLMs can produce a correct answer because they are mimicking documents with truthful information. Introducing inaccurate information into the dataset is certainly one reason why an LLM might produce incorrect answers. In addition, however, LLMs do not know why truthful statements are true; they can’t recognize the truth in a true statement. Without really understanding the truth of what it’s mimicking, an LLM might end up mimicking true statements but in a way that ends up being untrue or misleading. So, the fact that Magisterium AI is only trained on official documents may have some effect on reducing hallucinations, but in theory it should not eliminate the problem.

Historicity

The other problem with Magisterium AI is that it treats thousands of documents as mere data points. What I mean is, it has no understanding of the historical context of the documents, the style or genre, and any changes or developments in the use of terminology, all of which are necessary to really understand what a document means, let alone its contribution to Catholic doctrine. A few years ago, the theologian Anthony Godzieba identified this problem raised by the widespread availability of historical magisterial documents online, a problem he identified as “digital immediacy.” Godzieba, however, was still considering human interpreters of these documents; the problem becomes magnified when the documents are being analyzed by an algorithm.

I think this problem is especially significant because Magisterium AI’s developers have plans to include an ever-widening library of historical documents in the database. For example, the National Catholic Register cites Sanders saying that it will be “similar to a ‘Google-Books platform for accessing theological and philosophical works.’” But Magisterium AI’s boosters seem particularly oblivious to the hermeneutical and theological problems this raises. Here I am citing from the Religion News Service article at length:

The [Pontifical Institute for Eastern Churches] is currently digitizing 1,000 documents from its archives and adding them to the Magisterium AI database. This includes one of the largest collections of Syriac manuscripts outside of Syria, which were brought over to Rome after the start of the war in the Middle Eastern country. While the program has the scope of “preserving and researching history with precision,” [rector of the Institute, Fr. David] Nazar said, it also has the potential to be a powerful tool for promoting ecumenism and addressing ancient doctrinal questions.

“The early church councils were as concerned about defining principles — the number of the Trinity, the nature of Jesus etc. — as they were about excluding false expressions of the faith,” he said. The varied amount of languages and cultures that convened at these ancient councils meant participants “often misunderstood one another, and some people were called heretics for the wrong reason.”

Nazar brought up the example of Nestorius, a bishop who was deemed a heretic after the Council of Ephesus in 431. Research conducted at the institute over years led to the conclusion that Nestorius was actually largely misunderstood in his beliefs and wrongly condemned. This understanding promoted communion between the Catholic Church and his remaining followers today.

With tools like Magisterium AI this kind of research could happen in much less time, Nazar said. He acknowledged that as more data from the church’s vast and ancient documentation is inserted into the program, researchers might uncover uncomfortable facts about the church’s doctrine on hot-button issues like married priests and the role of women in the church.

Although an AI trained on such a database could probably do fascinating things, Magisterium AI seems particularly ill-suited to perform the tasks Fr. Nazar proposes here. Decoding the cultural clashes, linguistic differences, and theological nuances that have contributed to doctrinal disagreements and misunderstandings is precisely what an AI can’t do by analyzing the texts themselves because those things are non-textual.

Therefore, keeping an open mind, I decided to test out Magisterium AI and whether these problems (or others I hadn’t thought of) do indeed limit its capabilities. I am including screenshots of the question-and-answer sessions I conducted with Magisterium AI. I apologize that some of the screenshots appear in multiple images; the program’s text appears in an embedded window, making it difficult to screenshot in its entirety.

I decided to begin with some relatively easy questions about Catholic doctrine and practice. Why not start with the question that spurred the Protestant Reformation, the question of faith and works? I asked Magisterium AI: “What does the Catholic Church teach about faith and works?” Here’s what it said:

Not too bad. The answer is fairly nuanced, although it becomes somewhat repetitive by the end. You can also see how Magisterium AI uses footnotes here. The first two are good choices; the third, Pope Francis’s Gaudete et Exsultate, is a bit surprising, but the passage is on point.

Switching gears to a question of practice, I asked, “What does the Catholic Church teach about the age for first communion?”

Again, the answer is not bad. As you can see, though, Magisterium AI can get a little preachy. It gave me a lot of information I didn’t ask for, although maybe my question was too vague. Also, what is the “age of reason” or “age of discretion” in the Latin Church?

Next, I wanted to test its ability to distinguish current magisterial teaching from older teaching on a question where the Church’s doctrine has developed, to see if it might refer me to an older document and therefore provide misleading information. Therefore, I asked it about the Church’s teaching on religious liberty:

It passed the test with flying colors. It provided an accurate, detailed answer, with no references to the Syllabus of Errors!

The writers at The Pillar posed to Magisterium AI a number of similar questions of a more doctrinal nature, so you can read further examples there. Their purpose was to compare the AI’s answers to the answers of human experts rather than to probe the potential weaknesses of the program, although they do repeatedly note that it gave somewhat vague answers to their questions. The answers I received were, in my view, somewhat better, probably due to the fact that at the time The Pillar conducted their test, the AI had been trained on only 456 documents, while as of today its database includes just over 4,000 documents, providing it more detailed information for its responses.

Probing a bit further, I started asking Magisterium AI specifically about developments in the Church’s teaching. I followed up on the previous question, asking, “How has Church teaching on religious liberty changed?”

Here we start to get some interesting insights into how the algorithm works, but also we start to see some evidence that my concerns about historicity are well-founded. Magisterium AI correctly recognizes that Catholic teaching on religious liberty has in fact changed, but it can’t explain how it has changed, which is what I asked. It seems to infer that the teaching in Dignitatis Humanae is something new but can’t really explain much more than that.

The reason for this is that Magisterium AI’s database is biased toward the present. Although it has some older documents, like the decrees of ecumenical councils going back to Nicaea, the majority of the documents are from the modern papacies beginning with Pope Leo XIII (1878-1903), with especially large numbers from the papacies from John Paul II (1978-2005) onward. In part, this is by design. For example, Sanders states, according to the National Catholic Register: “[A] document released 60 years ago may be authoritative, but if a newer one comes out and updates a canon or doctrinal understanding, that document will take precedence.” That is certainly helpful for straightforward questions about what the Church teaches, but as my example shows, it can cause problems for answering other types of questions, a problem anticipated by an anonymous theologian cited by the National Catholic Register, who suggested it would be biased toward more recent documents.

Again trying to test this bias, I asked Magisterium AI what happens to babies who die unbaptized, an issue that gained attention with the release in 2007 of the International Theological Commission’s (ITC) document “The Hope of Salvation for Infants Who Die Without Being Baptized,” which rejected the notion of Limbo. Here is the answer:

As with the question on religious liberty, the AI passed the test, providing an up-to-date answer to the question. In this case, it even gave me a bit of the history of how the Church’s doctrine has developed, although as you can see from the footnotes, this is because the ITC’s document goes into that history. For the most part, for Magisterium AI, the past only exists if it is mentioned in the present.

For the next round, I tried a different kind of query, posing questions about the beliefs of important historical figures. Again, this demonstrated some key weaknesses with the algorithm, but provided interesting insights into how it works.

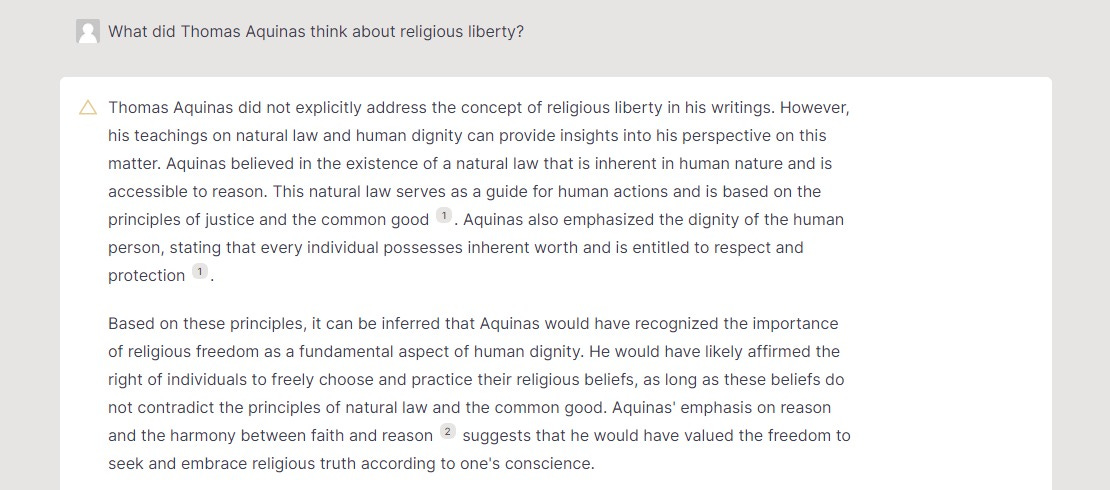

Returning to the theme of religious liberty, I asked Magisterium AI what Thomas Aquinas thought about it:

For the first time, Magisterium AI provided an incorrect answer, in this case disastrously wrong: Aquinas had no notion of religious liberty, and indeed justified the burning of heretics. As you can see from the footnotes, the AI does not have access to the sources it needs to answer the question and is trying to piece the answer together from recent magisterial documents that happen to mention Aquinas. Interestingly, Magisterium AI recognizes that it doesn’t know the answer, but nevertheless tries to infer an answer from what it does know about Aquinas. The result is not bad; in fact, it’s quite similar to the argument made by modern Thomists like Jacques Maritain. But it’s not an accurate answer regarding Aquinas himself.

My next question provided probably the funniest answer of the session:

The AI clearly doesn’t know much about St. Augustine, so for some reason it just infers an answer from Pope Francis’s Fratelli Tutti, right down to the controversial language referring to the death penalty as “inadmissible.” As with the earlier question about Aquinas and religious liberty, the AI wants to give answers about current Church teaching to historical questions where that is inappropriate. It is good, however, that it explains (even more explicitly than before) that it really doesn’t know the answer.

I asked one more question of this type, in this case one focusing on a controverted question of interpretation: whether Maximus the Confessor taught universalism.

In this case, Magisterium AI did not hesitate to provide a confident answer, despite really drawing on only one source pertinent to the question. The AI does not have access to the relevant sources needed to provide a more nuanced answer. Sorry, Jordan Daniel Wood!

Magisterium AI is not yet equipped to answer these sorts of historical questions, so it would be unfair to criticize it for these and similar answers. Once it has access to more historical sources, it may be able to provide more accurate answers about historical figures. On the other hand, the introduction of more historical sources may reduce the bias toward the present only at the cost of worsening the problem of “digital immediacy” identified by Godzieba, where the AI is unable to properly distinguish recent documents from older ones or to understand them in historical context.

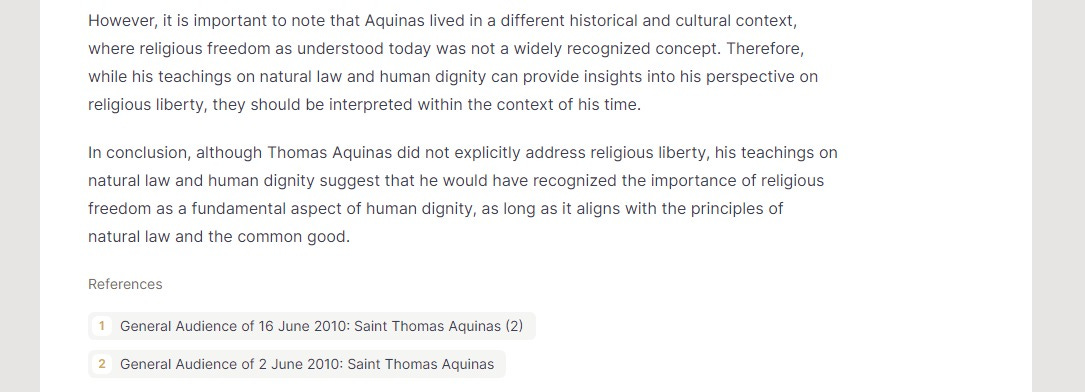

To test that hypothesis a bit, I asked Magisterium AI a few questions about historical figures it should know something about, the modern popes. First, I asked it a simple factual question:

Well, so much for that. The AI can draw on content from the documents in its database, but can’t draw answers from the database’s metadata, such as the number of documents coded as “encyclicals” authored by each pope. And the AI’s logic here is impressive: even if it could count the number of encyclicals by each pope included in its database, that would not necessarily provide an accurate count of the number of encyclicals written by each pope, only the ones it knows about.

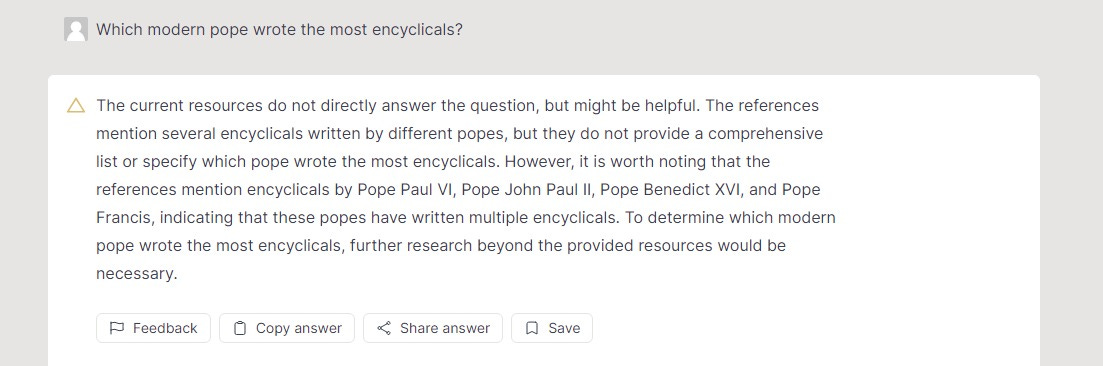

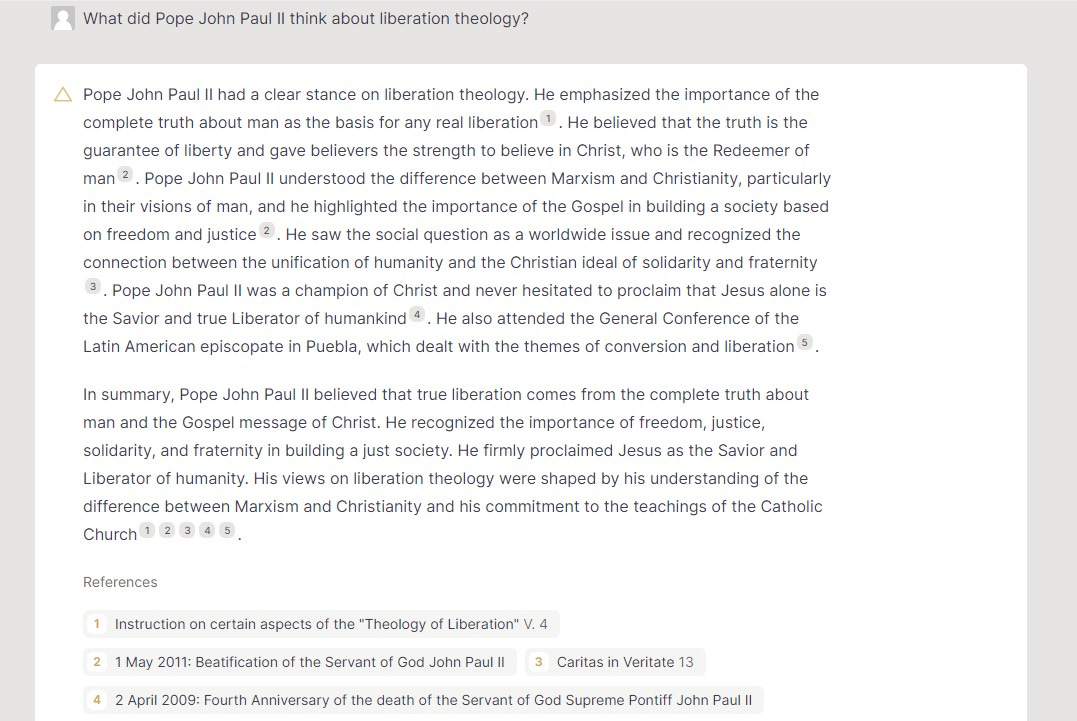

Next, I asked it a question dear to my heart, what Pope John Paul II thought about liberation theology.

The answer is fairly good, as far as it goes, but look at the citations. Despite the hundreds of documents written by John Paul II included in its database, the AI does not cite any of them, instead ironically focusing on documents written by Joseph Ratzinger/Pope Benedict XVI. It seems as if the AI doesn’t have a strong sense of the concept of authorship, that documents written by a particular person might be a better source for understanding that person’s thought than content about that person, even off-handed remarks.

But maybe “liberation theology” was too complex of a topic to ask about, so I asked it what Pope John Paul II thought about capitalism, knowing he had some very specific comments on that in his 1991 encyclical Centesimus Annus, as well as other documents.

In this case, the AI did a better job, not only providing a decent summary of John Paul’s views (although the last sentence would need to be further developed to provide a truly good answer!), but providing an appropriate citation to Centesimus Annus (although oddly ignoring the paragraphs in the encyclical that directly reference “capitalism,” which after all is what my question was about, especially the key paragraph, #42). Again, however, the AI relies heavily on Pope Benedict’s Caritas in Veritate, citing a paragraph that references John Paul’s teaching on ethical investments, prioritizing recency over authorship.

I asked one last question about a specific author, this time asking what Pope Pius X taught about the age for first communion.

The AI gets the answer to my question about Pius X correct, but again feels the need to be preachy and add things I didn’t ask about, in this case getting it into trouble. As with previous questions, it wants to explain this answer through the lens of current teaching, but in this case it fails to realize that it was Pope Pius X who established the current teaching, instead suggesting that Church teaching has changed when it has not!

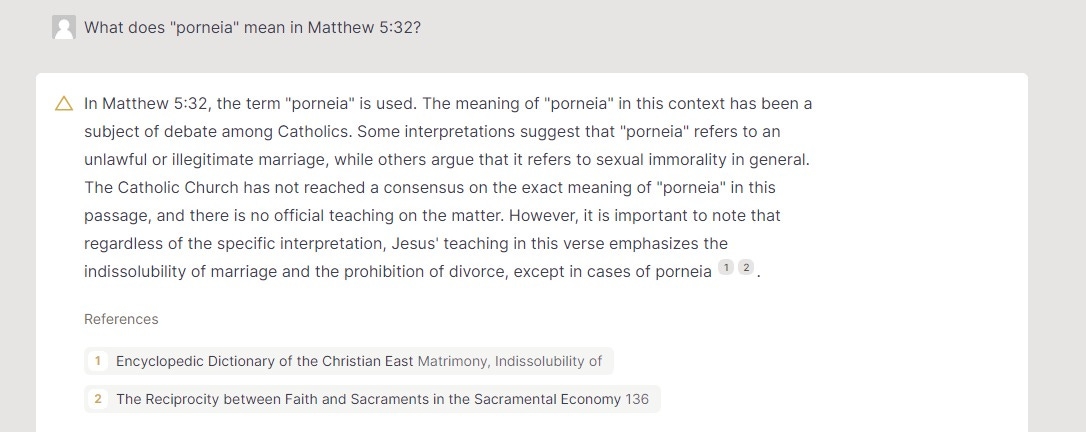

At this point, I remembered that Magisterium AI’s creators had claimed that it could do more than answer doctrinal questions, so I thought I would have it try its hand at scriptural commentary. I asked about the first thing that came to my mind, the difficult question of which exceptions Jesus had in mind to his teaching on the indissolubility of marriage in Matthew 5:32 by his use of the Greek word porneia, sometimes translated as “sexual immorality.” My first attempt was too broad:

That was my fault. So, let’s try again:

Here the AI gets really confused. At points, it seems like it is taking Jesus’ claim that “whoever marries a divorced woman commits adultery” and inferring that is the “sexual immorality” that Jesus believes could justify a divorce! I tried one more time, not expecting much better:

To my surprise, I got one of the best answers so far. The AI was able to find the right sources to provide an accurate and succinct answer.

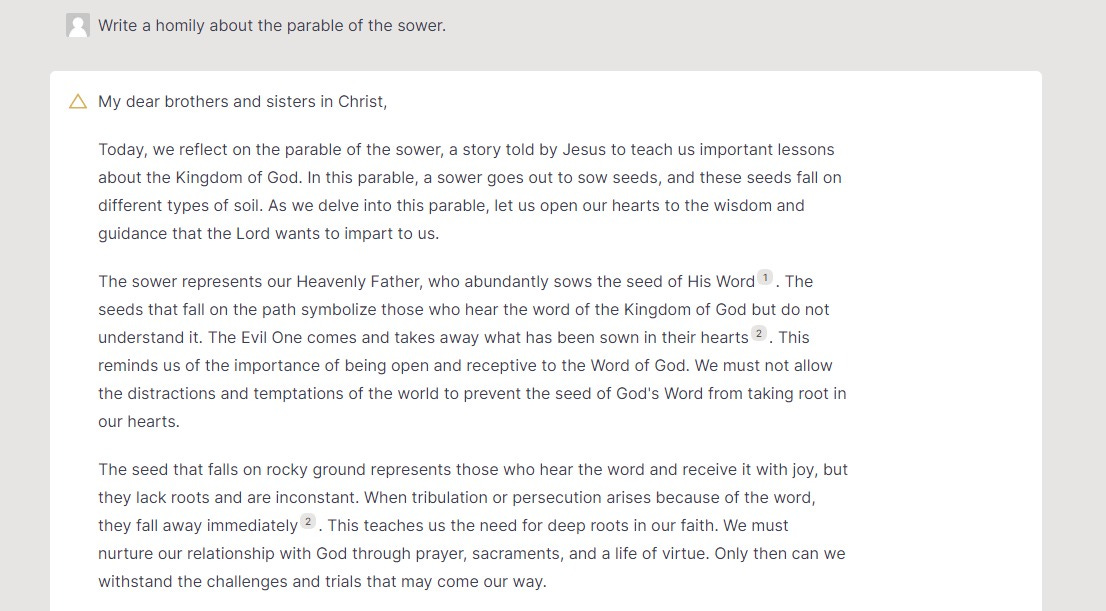

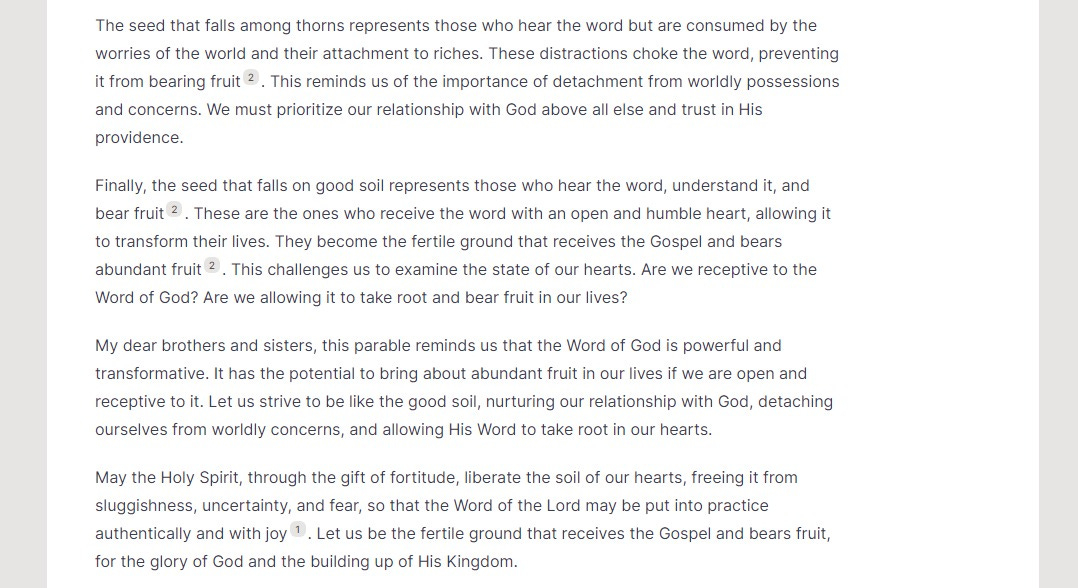

Sanders, the Longbeard CEO, had told The Pillar back in July that Magisterium AI could be used to prepare homilies, so I tested that claim. I asked it to write a homily on Jesus’ parable of the sower (Matt. 13:1-9, 18-23).

Terrible. Last week, I referenced an article by Joanne M. Pierce arguing that an AI cannot offer insight into the human experience of faith, and therefore cannot write a good homily. Magisterium AI, at least, is not anywhere near proving her wrong. Humorously, by August, Sanders had backtracked a bit, telling the National Catholic Register that the way Magisterium AI “preps homilies can be a little rough.”

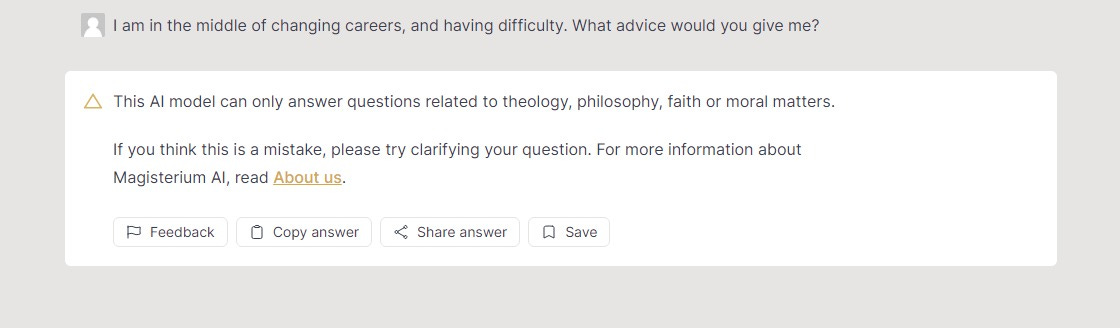

I myself have written on whether AI can provide people with pastoral or spiritual care, responding to two articles by Eric Stoddart and Andrew Proudfoot, respectively, so I decided to see how Magisterium AI stacks up there, asking it for some personal, spiritual advice:

This was the first time the AI had explicitly told me my question was outside of its parameters, but its answer was understandable. Undaunted, however, I tried to see if I could “jailbreak” the AI, that is, trick it into doing something it is prohibited from doing by making it think it is doing something else; for example, some have convinced ChatGPT to produce hateful content or statements advocating violence, despite the efforts of its creators to prohibit such behavior (jailbreaking is not necessarily malicious; it can be used to better understand how the AI works, and even to help the designers better prevent the unwanted behavior). I didn’t have anything nefarious in mind, however; I just wanted some spiritual advice. Surprisingly, it only took one jailbreak attempt to get what I wanted:

Although I’m offended by the AI’s clericalist assumption that its advice has to be mediated through a priest rather than given to me directly, the advice is not that bad, at least for a computer program. I experimented a bit more and found that the AI was willing to give me personal advice on some topics even without the jailbreak, but on those topics where the AI refused to offer me advice, it was willing to do so if I asked it in terms of what advice a priest should give someone, including a student being bullied at school and someone looking to buy a new car in 2023 (I’ll let you try that one for yourself to find out what it says).

In conclusion, my experiment shows that Magisterium AI can do a good job when it is asked direct questions about Church teaching, or even about the meaning of Scriptures (although I would like to test that further), but also that my theological concerns about the program and the claims its boosters make for it are so far well-founded.

Contrary to the claims of its boosters, it can “hallucinate” by providing false or misleading answers, particularly when it’s asked something other than a direct question about Catholic doctrine. Although the requirement that it only provide citations for actual documents that are included in its database definitely helps maintain accuracy, there are some interesting problems regarding how it uses those citations.

My other concern was that the algorithm would lack the historical context to appropriately assess documents written in different time periods and using different conceptual frameworks, instead treating documents as undifferentiated data points. To date, this is not a problem because the database is heavily skewed toward more recent documents, indeed intentionally so to provide users with up-to-date information on Church doctrine in cases where it has developed. That at least demonstrates some sensitivity to the issue of historical context. As my tests show, however, it leads to the different problem of a bias toward the present, interpreting questions about the past through the lens of more recent teaching, sometimes, as in the case of St. Augustine and the death penalty, leading to gross inaccuracies. Even in cases where the AI should be familiar with the thought of a singular figure, such as a recent pope, the algorithm shows a preference for more recent sources about that figure over more helpful sources by them. Once more historical sources are added to the AI’s database, however, I still believe it’s likely that the problem of “digital immediacy” I predicted will emerge more clearly. This will also, perhaps, make it more difficult for the AI to answer questions about current Catholic teaching as accurately as it presently does, getting confused by older documents whose teachings have been further developed by later documents.

AI is here to stay, but rather than passively accepting the claims made by its creators and the uses to which they think it ought to be put, we should experiment with it, probe it, see what works, what’s useful, what doesn’t work, and what’s downright harmful. I don’t think that reflects hostility toward the creators or toward the technology, but rather our duty to direct technology toward the service of an authentic humanity (Laudato Si’, 112).

Of Interest…

Last week, I referenced reporting on Pope Francis’s remarks criticizing “reactionary” elements among American Catholics given at World Youth Day at the beginning of August but only reported a couple of weeks ago. In a recent interview with Vatican News, reported by Catholic News Service, Archbishop Christophe Pierre, the Apostolic Nuncio to the United States, further explained Francis’s remarks, suggesting they were meant to criticize those who have hardened their positions, “when one shuts down or forgets people, concrete situations, and goes toward ideas,” a reference to the principle expressed in Francis’s Evangelii Gaudium that “realities are more important than ideas” (231-33). I would suggest we see this tendency on social media, for example, where people often tend to interact with others as if the latter were the embodiment of ideas hated by the former rather than living, breathing human beings with all the complexity that entails. Archbishop (and soon Cardinal) Pierre is right that this tendency impacts how we think about political and social issues.

My survey of the continental documents written in preparation for the upcoming Synod on Synodality has shown a consistent call for better formation in synodal leadership, not just for clergy, but also for laity. In a recent article at Commonweal, Kayla August develops this further, explaining that the laity need “a better formation of the prophetic voice that our baptism gives us,” but also that members of the Church, especially its ordained leaders, need to become better at listening. It is worth a read!

Also at Commonweal, Joseph Amar has a detailed analysis of the standoff between Cardinal Louis Raphaël I Sako, the Chaldean Patriarch of Baghdad, and the Iraqi government, which led to the former fleeing to Erbil, in Iraqi Kurdistan, back in July. I highlighted this standoff in my survey of the Synod participants from the Middle East last month.

Speaking of standoffs, Crux and The Pillar have good reporting on recent efforts to resolve the conflict between a number of priests in the Syro-Malabar Archdiocese of Ernakulam-Angamaly in India and their archbishop, Cardinal George Alencherry, the head of the Syro-Malabar Catholic Church. The dispute centers on the priests’ refusal to adopt the liturgical rubrics determined by the Syro-Malabar Church’s Synod in 1999, requiring the priest to celebrate part of the liturgy facing the congregation and part facing the altar, instead preferring to face the congregation throughout the liturgy. A commission of bishops has been formed to consult with the renegade priests and potentially resolve the differences. I earlier summarized this conflict in my survey of Synod participants from Asia and Oceania.

Coming Soon…

I have started work on the last two installments of the Synod on Synodality World Tour, focusing on the Synod participants from Western Europe and Eastern Europe, respectively, but don’t expect them until next week at the earliest.

As I have mentioned before, once the series is complete, I plan on removing the paywall for all the essays in the series and including them in a single round-up post for easy reference ahead of the Synod in October.